Merry Christmas and a Happy New Year to you. Hope you had some time off and a relaxing break. I certainly did while playing a lot of BlazeRush with my kids. BlazeRush has a solid frame rate, excellent effects, great feeling dynamics and it's easy to play. The devs just added some of the best VR support I've seen in a commercial title too. It's great value for money on Steam, go get it.

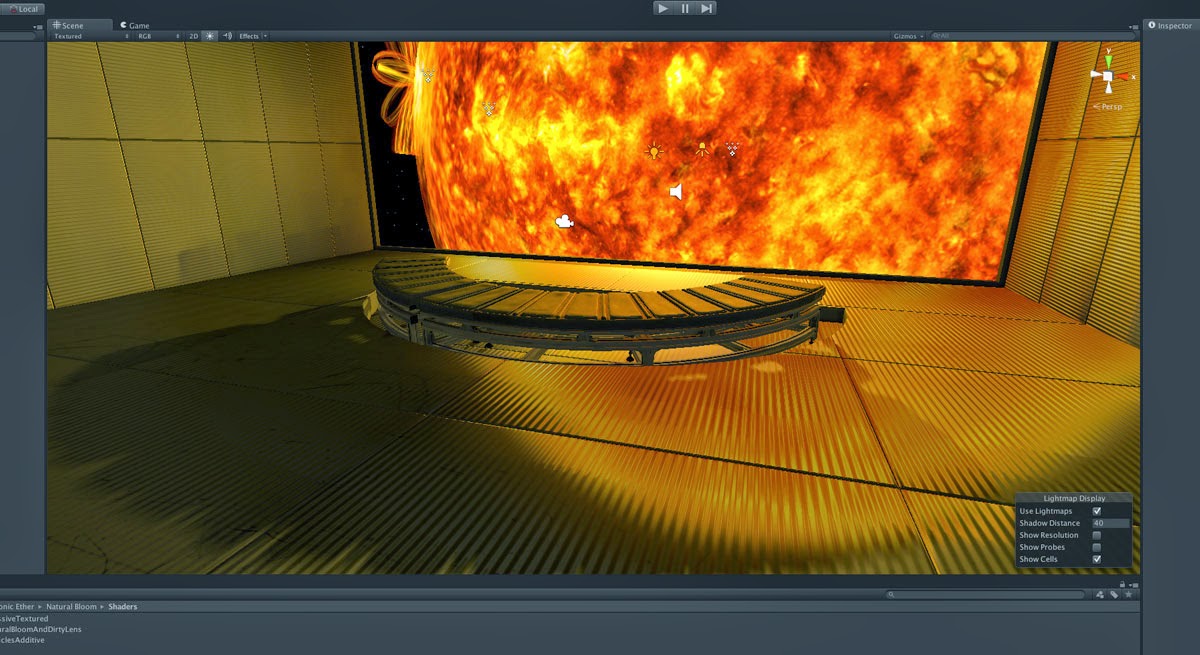

This post will cover my discoveries about the difference between deferred rendering and forward rendering in Unity and how alpha transparencies forced me to learn a thing or two, plus some screenshots of where the sunshine observation deck is at. Things are nearing the time when I'll release it to the community, as I'm itching to start another idea I had. It's a bit technical, but there are nice pictures at the end.

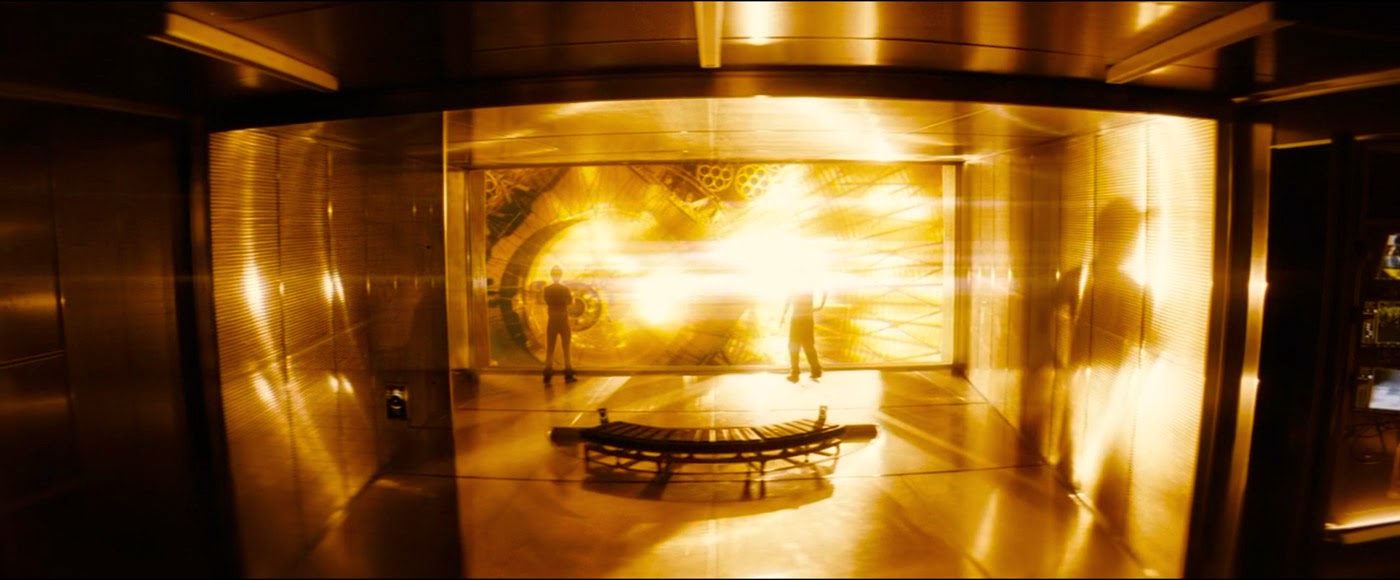

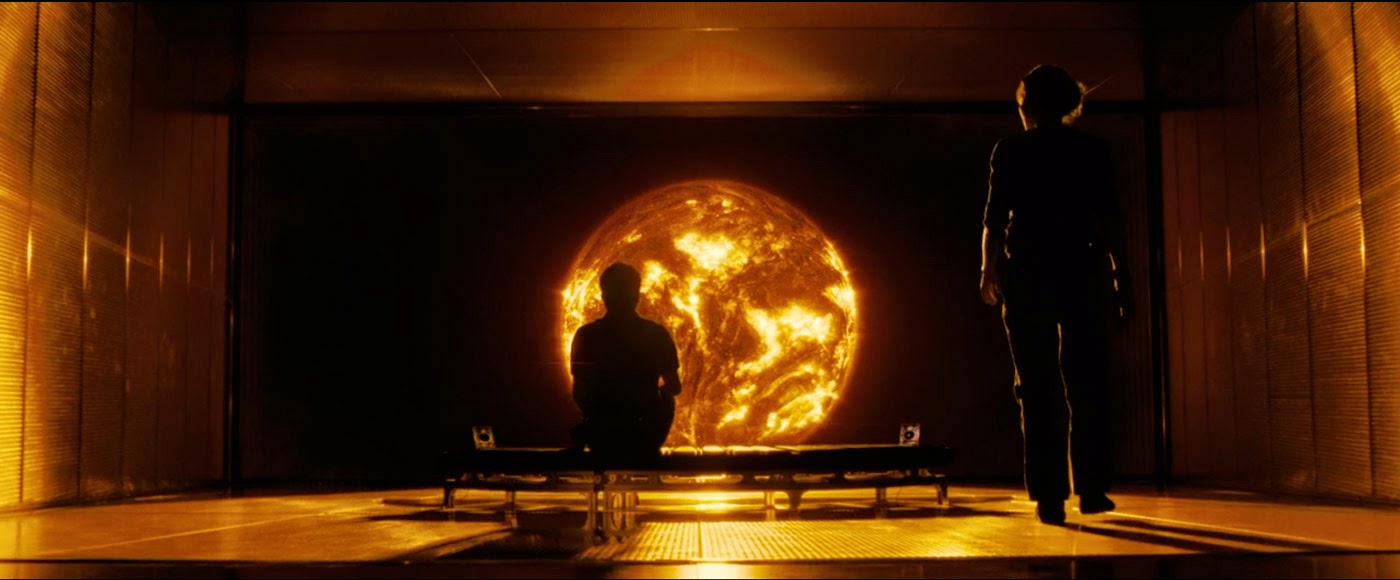

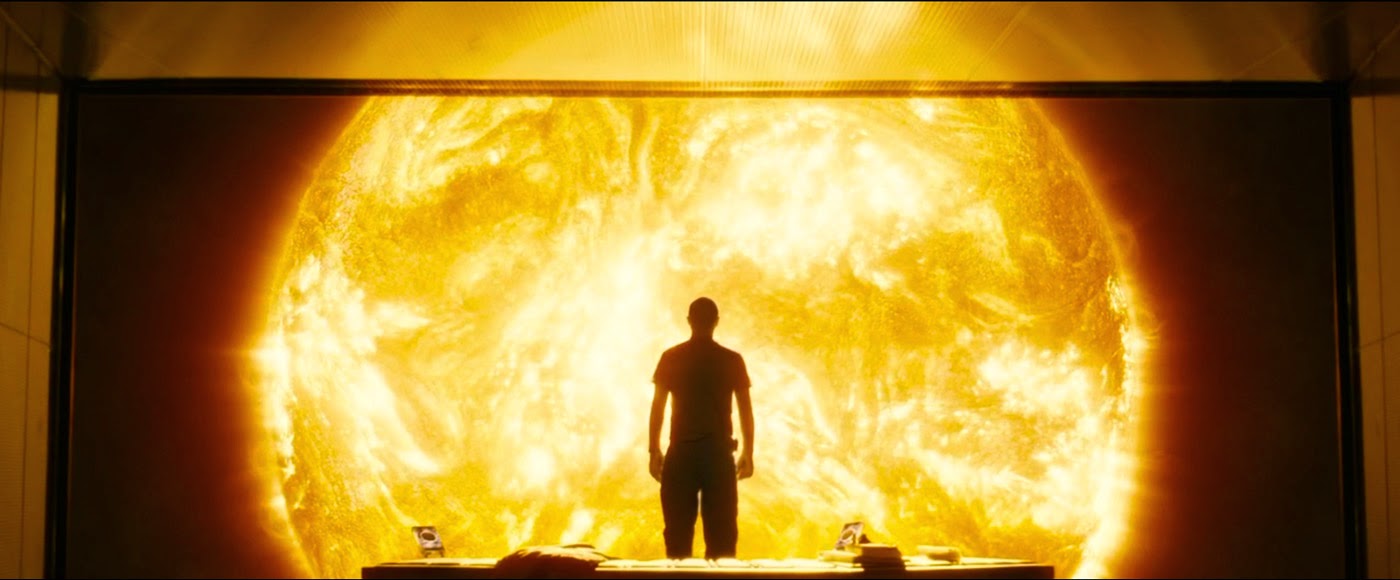

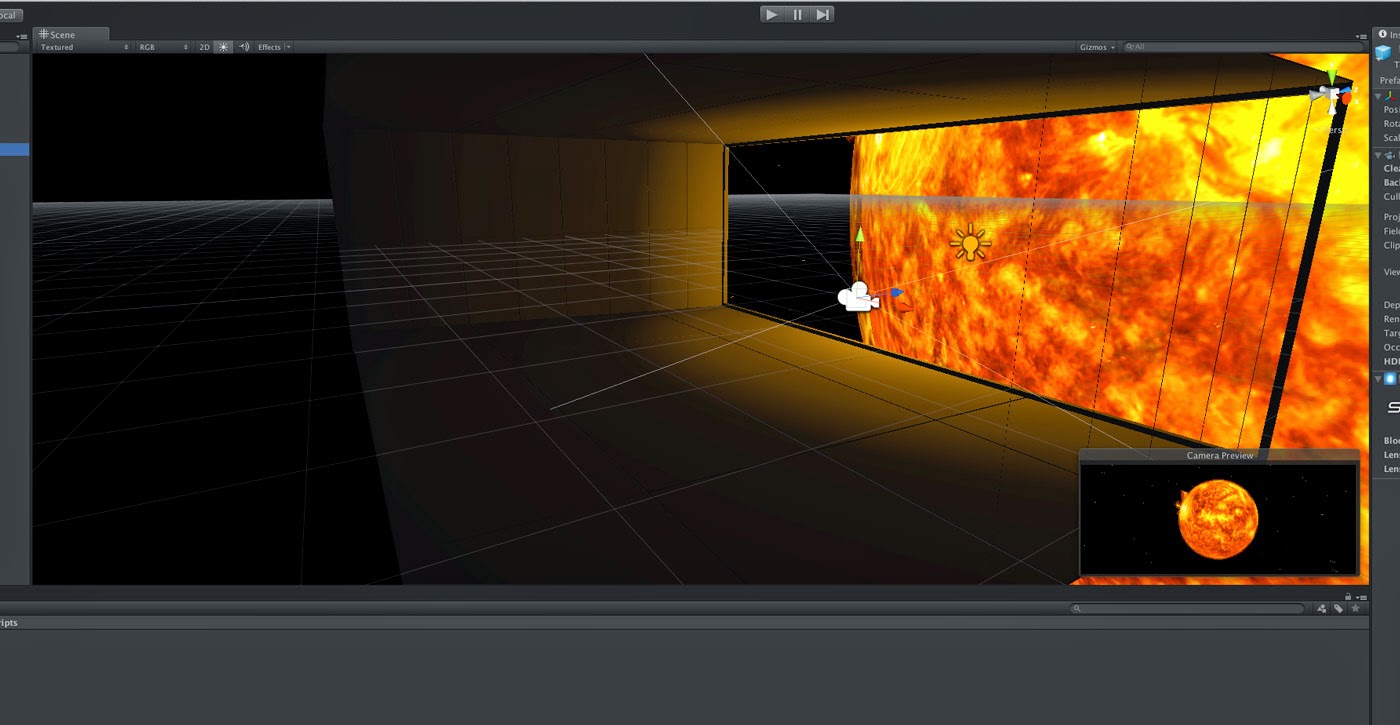

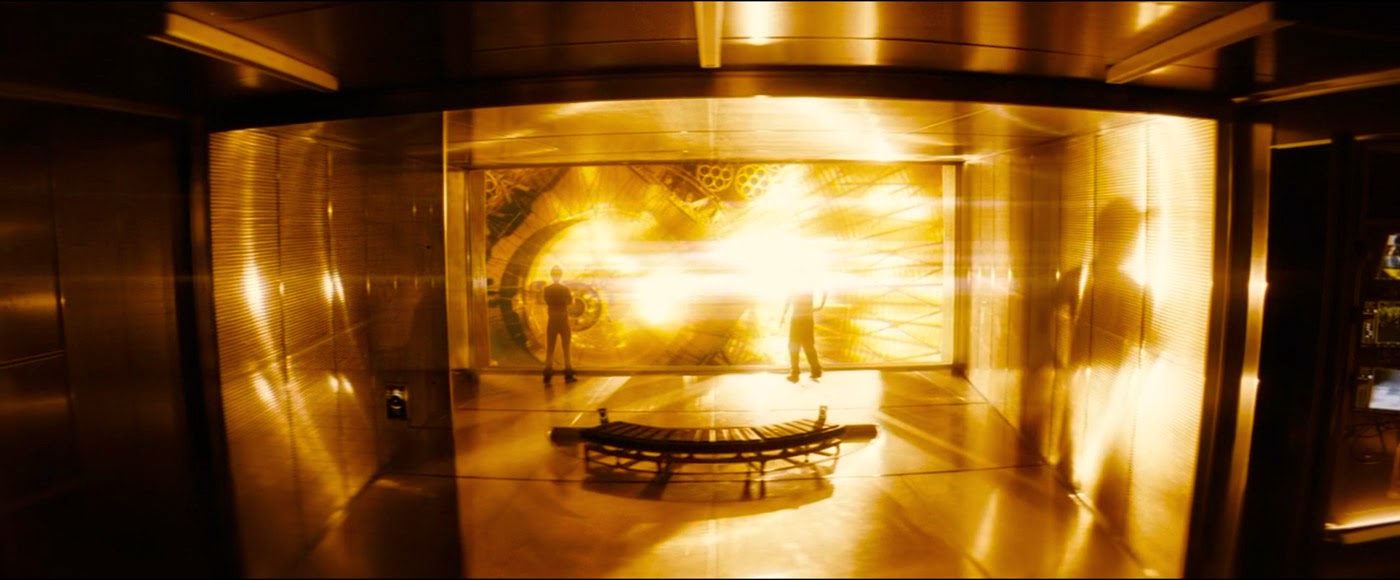

In the last post I talked about physically-based rendering and cubic environment maps, shortly after which I needed to create the transparent, alpha-mapped smoked-glass window you can see in the frame grabs from the movie below:

|

| Smoked-glass sliding door screen-left. |

|

| More smoked-glass, this time from inside the science lab. |

As a new Unity user [n00b], I had previously switched on deferred rendering in the graphics pipe ['cause it sounded great], paying only scant attention to the documentation, which mentions that deferred rendering supports a multitude of dynamic lights. I thought 'Oh yeah, dynamic lighting, that's something I'm going to want for sure', and completely ignored the part that mentions transparent objects must be rendered in forward rendering mode.

I happily proceeded to create the sliding door geo, apply Unity Pro's refractive shader, and watch my frame rate plunge below 60 fps as I approached the glass.

Oh noes!!! The horror! I resigned myself to the fact that I probably couldn't afford this cool looking effect with my GPU [nVidia GTX 680MX] and swapped the Pro refraction shader for a plain alpha-mapped transparency shader. So sad. However, I remembered that for achieving a sense of presence, Oculus recommend a high frame rate over visual fidelity.

|

| So money. I must have it at all costs. |

But when I previewed the alpha-mapped transparency shader in the Rift the frame rate still took a nosedive when I approached the glass. So the transparency itself was the cause of the problem and not the refraction shader? But why should transparency cause such a hit to the GPU?

There are two main problems and the answer lies in the order in which the objects I'm asking Unity to draw are rendered. I risk stuffing both feet in my mouth trying to explain this as I do not have OpenGL coding experience nor a computer science background, but here goes a quick summary.

And here's a link to a great page describing both forward and deferred rendering modes if you'd like more info.

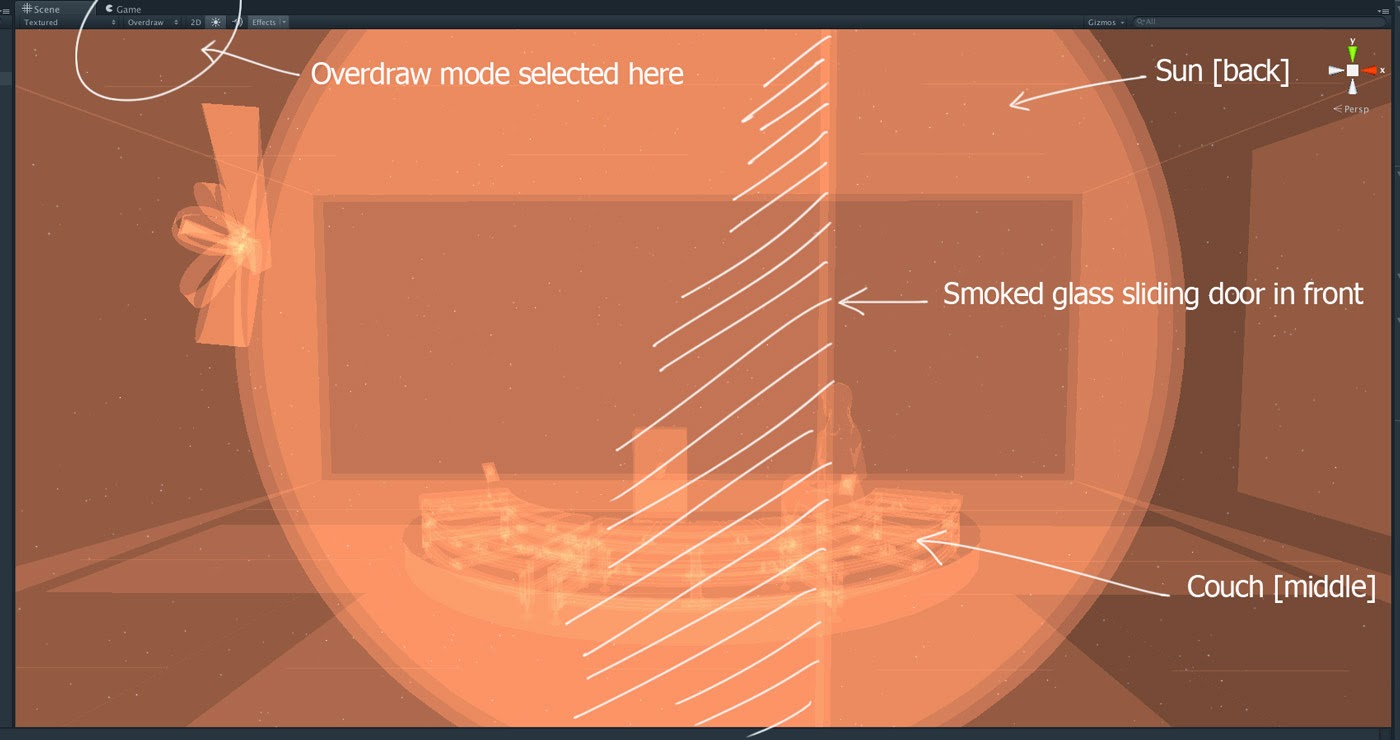

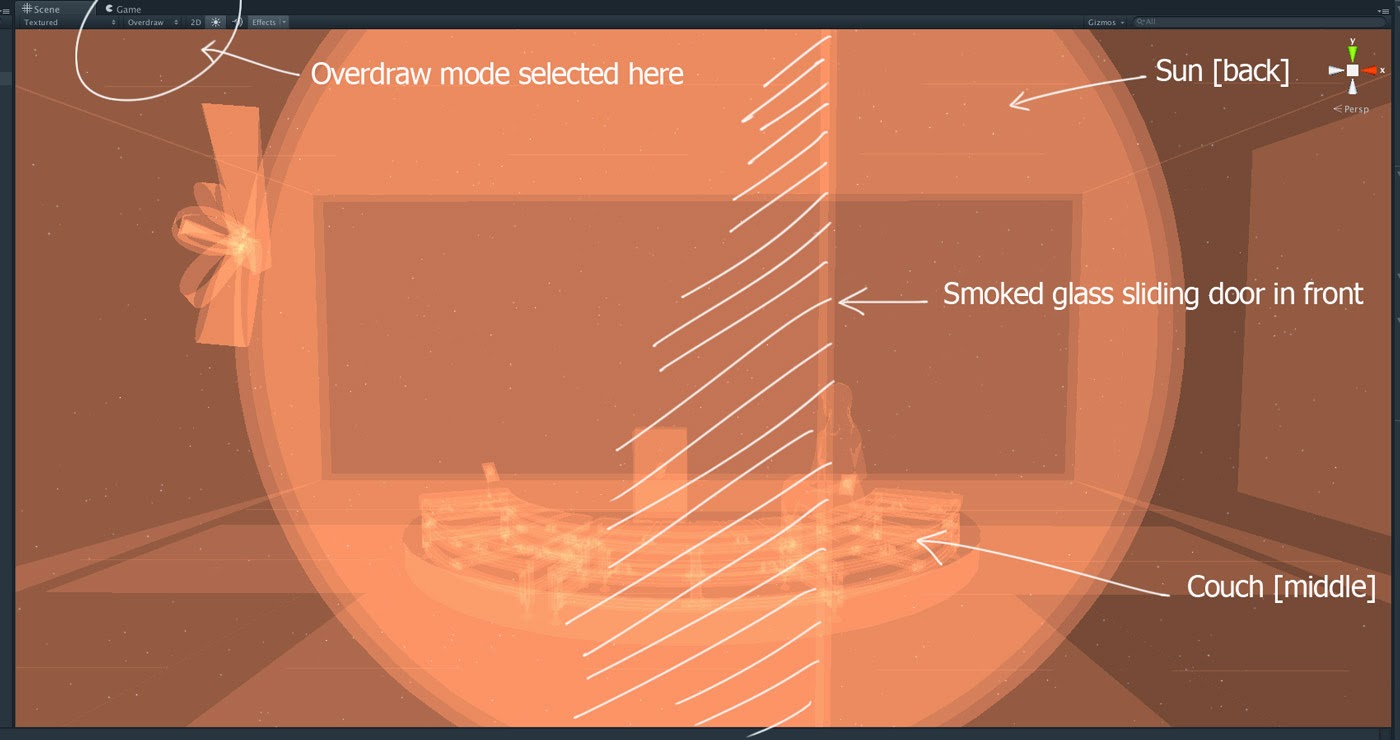

To draw the current frame, the simplest approach is for the GPU to draw the objects in the scene from the furthest one visible all the way up to the front, nearest the camera. This is good because objects are stacked logically and things appear in the correct order depth-wise, but it is also bad because things that end up hidden by other things are drawn unnecessarily, wasting GPU resources. This is the first problem. This unnecessary drawing is called 'overdraw', and Unity has a viewport mode dedicated to showing you what's hiding behind other things:

|

| Unity's Overdraw viewport mode - How to know what is transparent and what is not though? |

Smart video game design attempts to minimise this by culling or removing objects that are hidden behind others so the GPU never needs to draw them. I'm not being that efficient yet however and just relying on not having too much stuff to draw. We're in space after all. This doesn't really impact the base rendering speed *too* much, but it's part of the overall problem.
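To put rough numbers on overdraw, here's a toy sketch in Python (not Unity code, and nothing like how a real GPU is implemented). It treats each object as a screen-space rectangle, draws them furthest first, and counts how many times each pixel gets written, a bit like the Overdraw viewport does. The names `Rect` and `overdraw_map` and the little 4x4 "screen" are made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class Rect:
    """A hypothetical opaque object, flattened to a screen rectangle."""
    x0: int
    y0: int
    x1: int       # exclusive
    y1: int       # exclusive
    depth: float  # larger = further from the camera


def overdraw_map(rects, width, height):
    """Draw furthest-first and count how often each pixel is written."""
    counts = [[0] * width for _ in range(height)]
    for r in sorted(rects, key=lambda r: r.depth, reverse=True):
        for y in range(max(r.y0, 0), min(r.y1, height)):
            for x in range(max(r.x0, 0), min(r.x1, width)):
                counts[y][x] += 1  # this pixel gets drawn again
    return counts


# A far wall, a nearer panel covering the whole view, and a small crate in front.
scene = [
    Rect(0, 0, 4, 4, depth=10.0),
    Rect(0, 0, 4, 4, depth=5.0),
    Rect(1, 1, 3, 3, depth=1.0),
]
counts = overdraw_map(scene, width=4, height=4)

total_writes = sum(sum(row) for row in counts)                    # 36
visible_pixels = sum(1 for row in counts for c in row if c > 0)   # 16
print(total_writes, visible_pixels)
```

Only 16 pixels end up on screen, but 36 get drawn, so more than half the work is thrown away. Culling the hidden wall before drawing would remove 16 of those writes.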

The second part of the problem is that I'd asked Unity to draw in deferred rendering mode, where the geometry is processed in multiple passes to separate the jobs of drawing, lighting and texturing the scene's objects. In the lighting pass - and this is the main advantage of deferred rendering - many, many lights can contribute to the scene's illumination cheaply as there's no texturing or other stuff done at that time, hence you can have lots of dynamic lights. But, as far as I can tell, during the remaining texturing and compositing phase of the draw the transparent portions of the foreground objects must be considered when calculating the pixel sample values of the objects behind. And it's this continuous checking and sampling that destroys any speed gains made. The glass, and the way its transparency contributes to the appearance of the objects behind, has to be considered at every step. In fact the closer you get to the glass, or the more of your view is covered by it, the slower the GPU goes.

Forward rendering, however, also draws objects from the back up to the front, but it draws, lights and textures the scene's objects as it goes. Each object is rendered in its entirety, then the next closest, and so on until right at the front, at the last millisecond, the transparent glass is drawn over the top and BLAM, that frame is done. It's this brute force approach that makes transparent foreground drawing feasible.
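Here's a heavily simplified Python sketch of why the lighting pass is cheap in deferred mode. It is a toy cost model under my own assumptions, not Unity's actual pipeline: surfaces are opaque, colours are single grey values, a "light" is just an intensity, and `Surface`, `render_forward` and `render_deferred` are invented names. The forward version lights every fragment as it is drawn; the deferred version first stores surface data per pixel and only then lights each pixel once.

```python
from dataclasses import dataclass


@dataclass
class Surface:
    """A hypothetical opaque surface covering a set of (x, y) pixels."""
    pixels: set
    depth: float   # larger = further from the camera
    albedo: float  # grey-scale surface colour, 0..1


def render_forward(surfaces, lights):
    """Light every fragment as it is drawn, furthest surface first."""
    frame, light_evals = {}, 0
    for s in sorted(surfaces, key=lambda s: s.depth, reverse=True):
        for p in s.pixels:
            frame[p] = s.albedo * sum(lights)  # one evaluation per light
            light_evals += len(lights)
    return frame, light_evals


def render_deferred(surfaces, lights):
    """Pass 1 stores surface data only; pass 2 lights each pixel once."""
    gbuffer = {}
    for s in sorted(surfaces, key=lambda s: s.depth, reverse=True):
        for p in s.pixels:
            gbuffer[p] = s.albedo  # nearer surfaces overwrite, no lighting yet
    frame, light_evals = {}, 0
    for p, albedo in gbuffer.items():
        frame[p] = albedo * sum(lights)
        light_evals += len(lights)
    return frame, light_evals


full_view = {(x, y) for x in range(4) for y in range(4)}
surfaces = [
    Surface(full_view, depth=10.0, albedo=0.5),                       # far wall
    Surface(full_view, depth=5.0, albedo=0.8),                        # nearer panel
    Surface({(1, 1), (1, 2), (2, 1), (2, 2)}, depth=1.0, albedo=0.2), # crate
]
lights = [0.25] * 8  # eight dynamic lights

forward_frame, forward_evals = render_forward(surfaces, lights)
deferred_frame, deferred_evals = render_deferred(surfaces, lights)
print(forward_evals, deferred_evals)  # 288 vs 128, same final image
```

Note that the toy buffer keeps exactly one surface per pixel. That is fine for opaque things, but a sheet of glass needs both itself and whatever is behind it, which fits with the documentation's note that transparent objects must be rendered in forward rendering mode.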
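The "glass drawn over the top" step can be sketched with the standard alpha 'over' blend, where the new colour is mixed with whatever is already in the frame. This is a minimal Python illustration, assuming plain RGB tuples and a single opacity value per layer; `blend_over` and `composite_back_to_front` are names I made up, and the refraction effect is not modelled at all.

```python
def blend_over(dst, src, alpha):
    """Standard 'over' blend: src drawn on top of dst with opacity alpha."""
    return tuple(alpha * s + (1.0 - alpha) * d for s, d in zip(src, dst))


def composite_back_to_front(background, layers):
    """Blend transparent layers furthest-first onto an opaque background.

    layers is a list of (depth, colour, alpha); larger depth = further away.
    """
    colour = background
    for _, src, alpha in sorted(layers, key=lambda layer: layer[0], reverse=True):
        colour = blend_over(colour, src, alpha)
    return colour


# Sunlit orange wall seen through one pane of 50% black smoked glass.
one_pane = composite_back_to_front((1.0, 0.5, 0.0), [(1.0, (0.0, 0.0, 0.0), 0.5)])
print(one_pane)  # (0.5, 0.25, 0.0)

# A white wall behind a red pane (far) and a smoked pane (near).
two_panes = composite_back_to_front(
    (1.0, 1.0, 1.0),
    [(1.0, (0.0, 0.0, 0.0), 0.5), (2.0, (1.0, 0.0, 0.0), 0.5)],
)
print(two_panes)  # (0.5, 0.25, 0.25)
```

Each blend reads the colour already in the frame, which is why the glass has to go on last: swap the two panes and you get (0.75, 0.25, 0.25) instead.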

Sure enough, switching Unity to forward rendering instantly restored my frame rate and allowed me to use the refractive shader, which looks cool. It's a little over the top, as glass on a spaceship this modern probably has no imperfections at all through which the background would be distorted, but I think it adds to the ambience. And since my scene is mostly static, I was able to bake any shadow-casting lights and extra stuff afforded by deferred rendering into the light-mapping. That's my problem solved!

Phew. If you're still here, congrats. Hope that wasn't too painful. And if I got this wrong, don't hesitate to tell me in the comments. Now for some screenshots of where the observation deck is at.

EDIT: Here's a great read/rant from a graphics programmer about the different rendering styles as well as a discussion on why it's hard to make mirrored surfaces in games: http://www.reddit.com/r/gaming/comments/12j1jn/

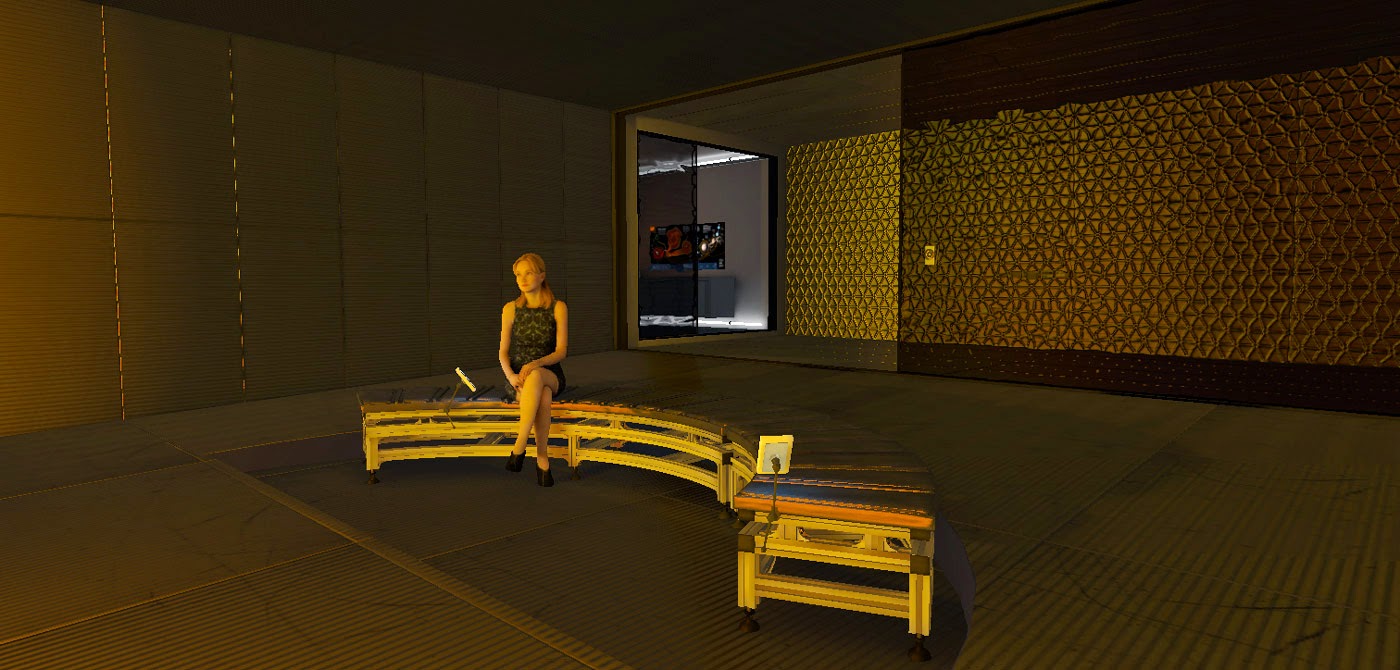

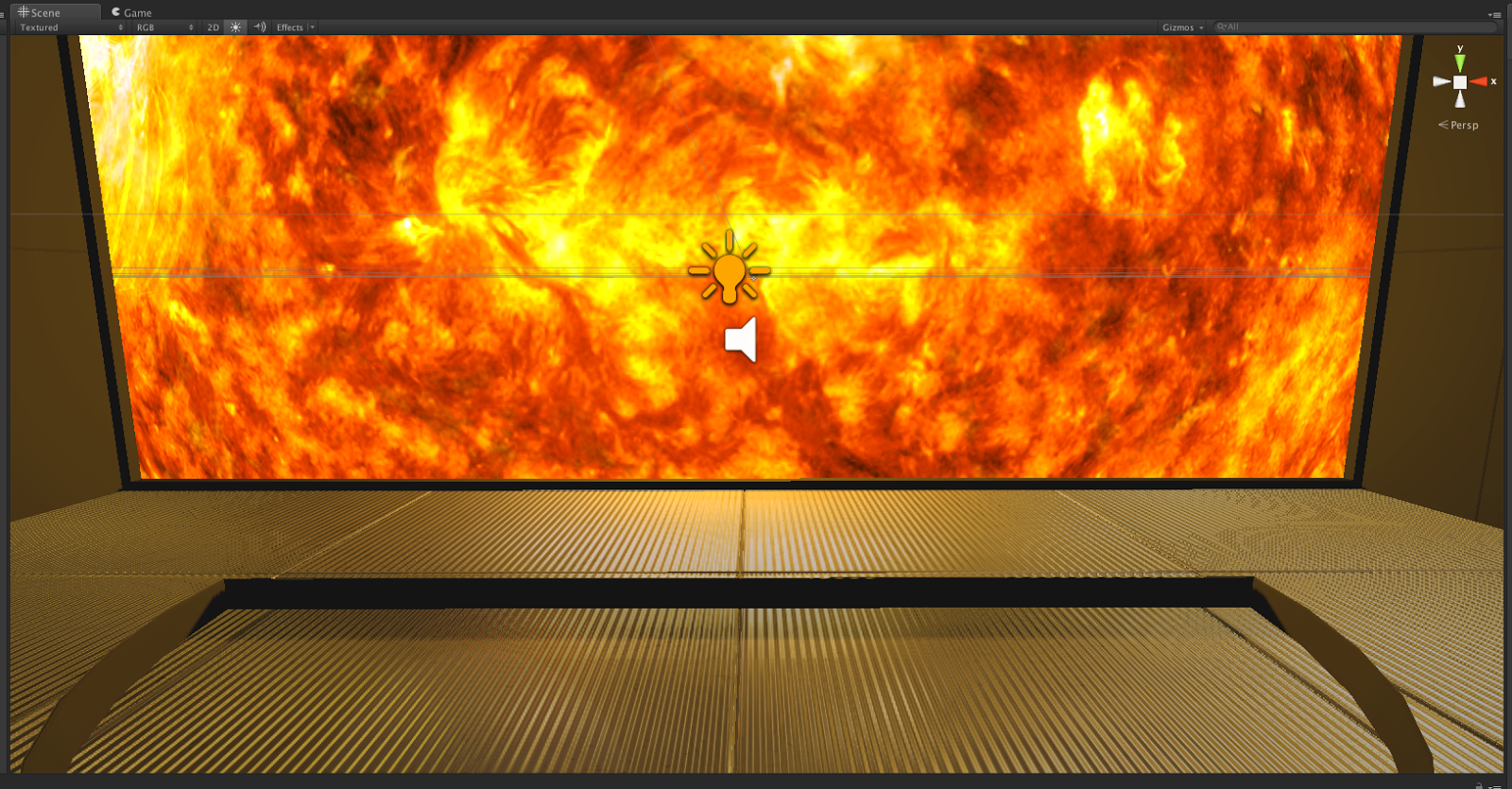

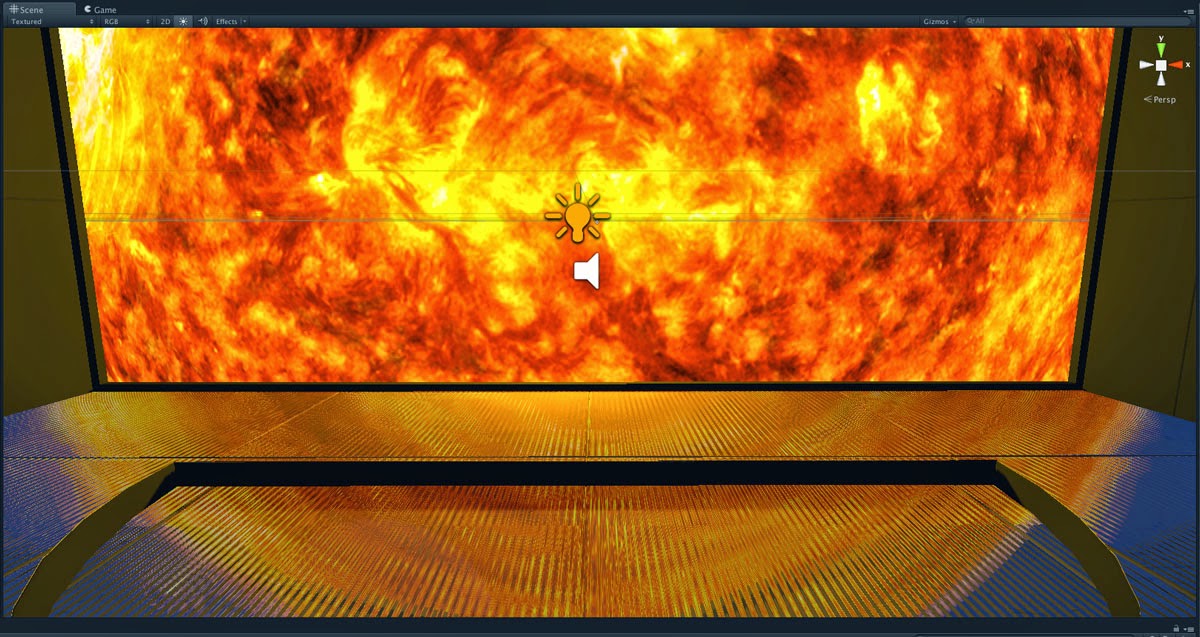

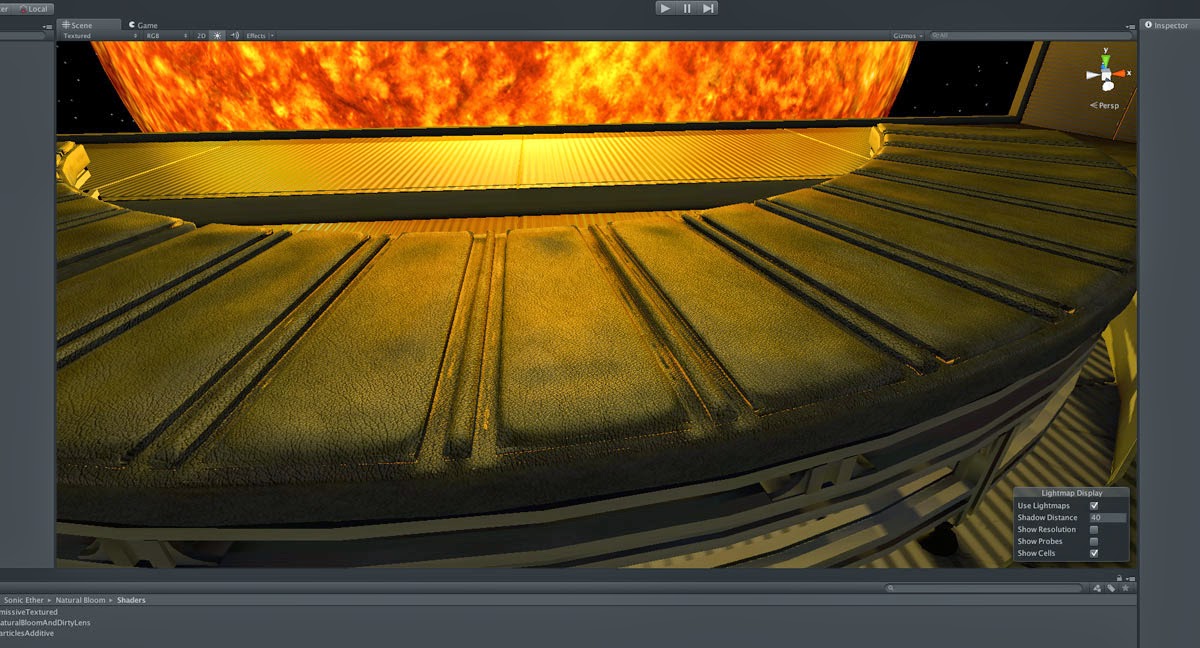

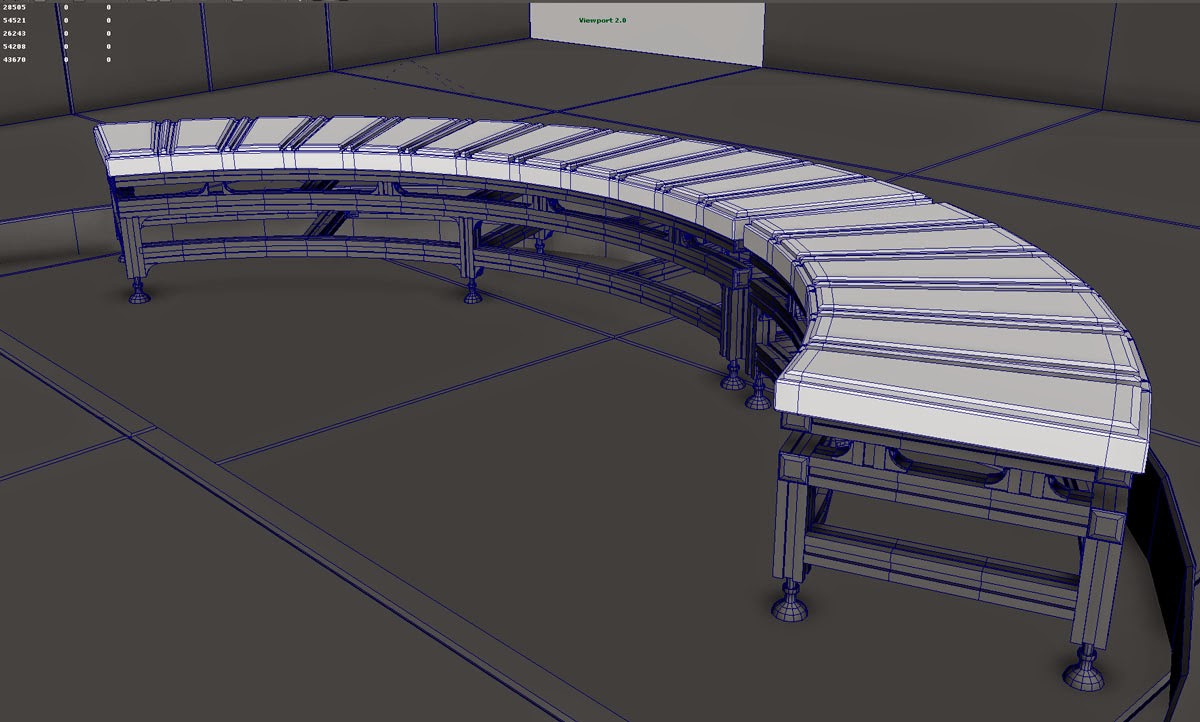

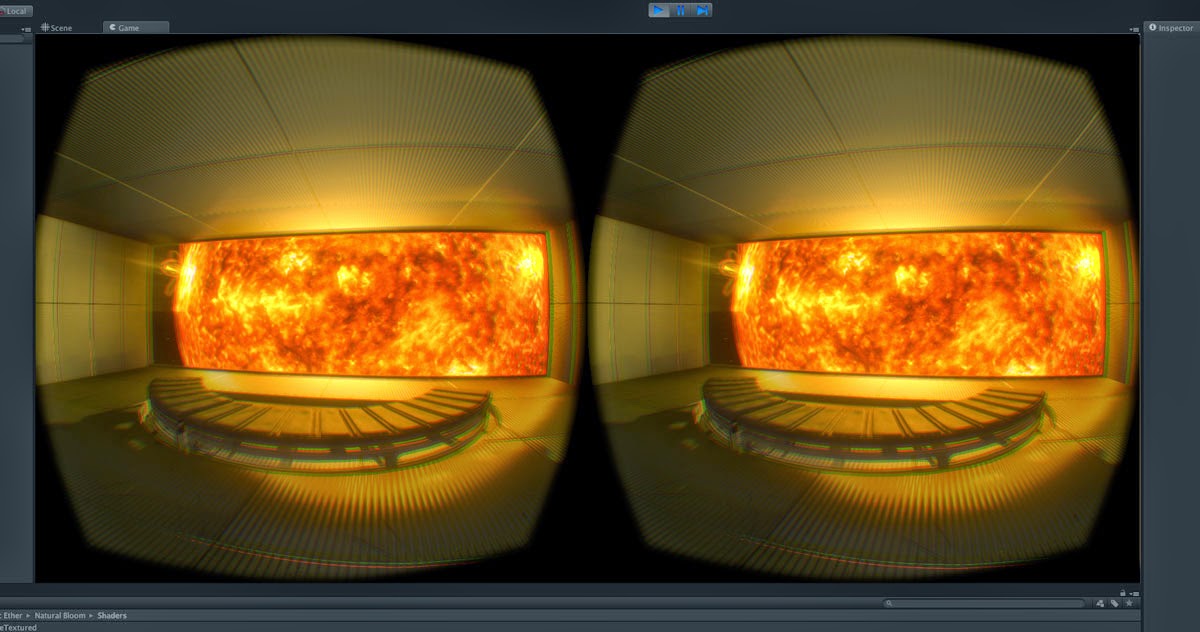

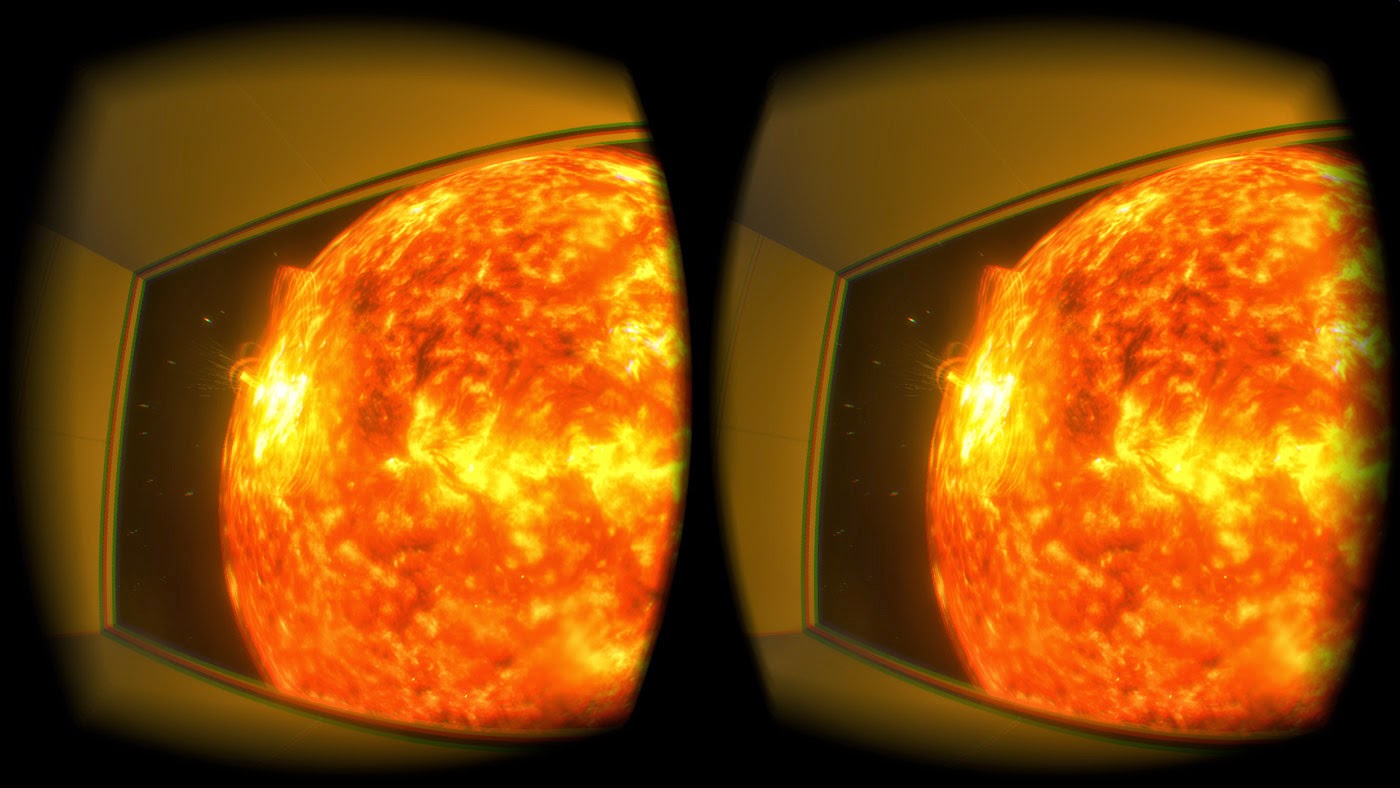

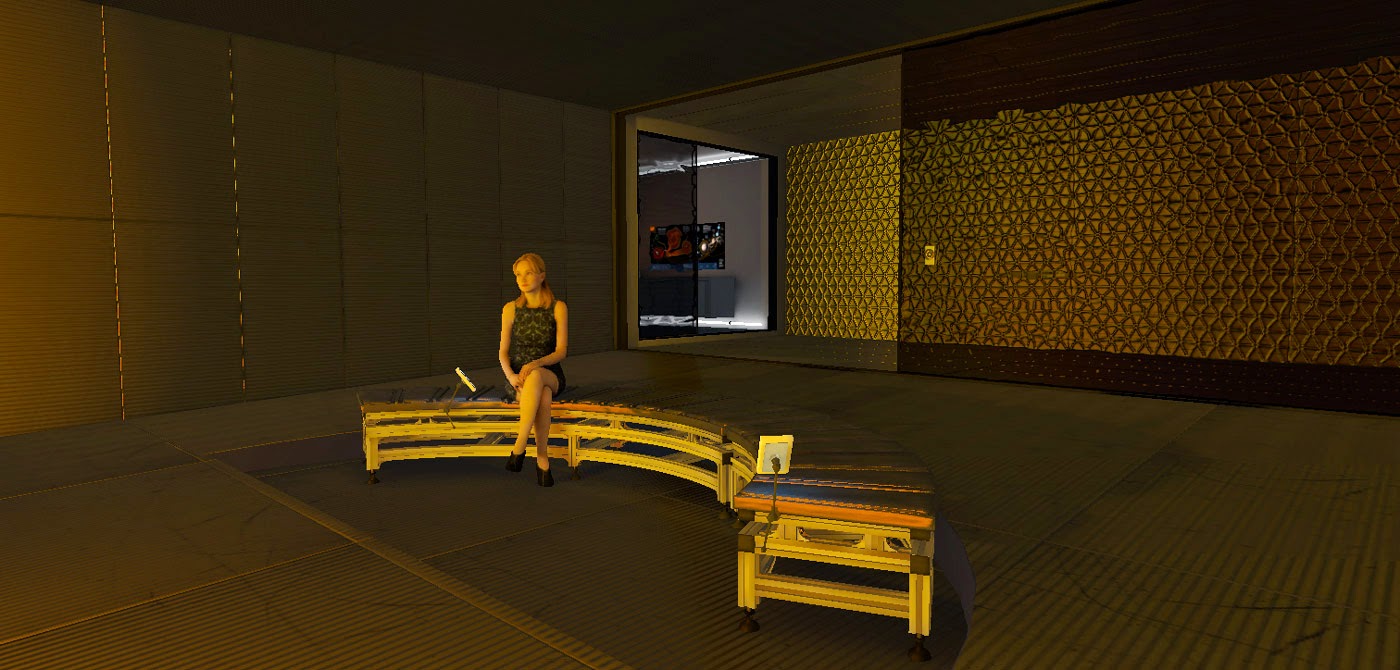

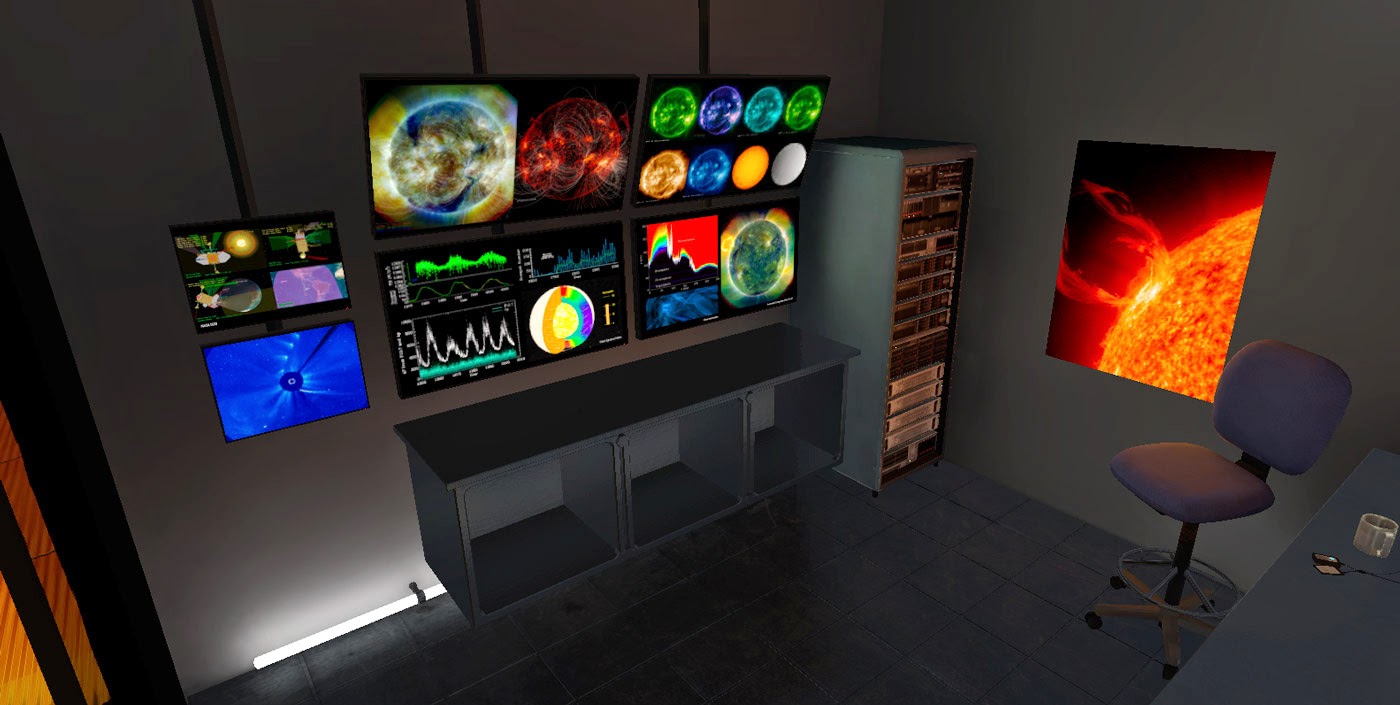

Please bear in mind that the following images lack the optical effects present in the Rift's view, which help dramatically in creating the missing atmosphere. But the double imaging of the Rift screen makes it tough to see what's there, so this is how you can see it for now:

|

| I found a free, high-quality model of a seated woman online to share the viewing couch. |

|

| Viewing deck exposure controls are present. |

|

| Rear corridor hatch and controls. |

|

| Rear wall normal-mapped panelling. |

|

| Science lab doorway. |

|

| Ergonomic chair. On a spaceship. Of *course* it's ergonomic. Who'd send an un-ergonomic chair into space!? |

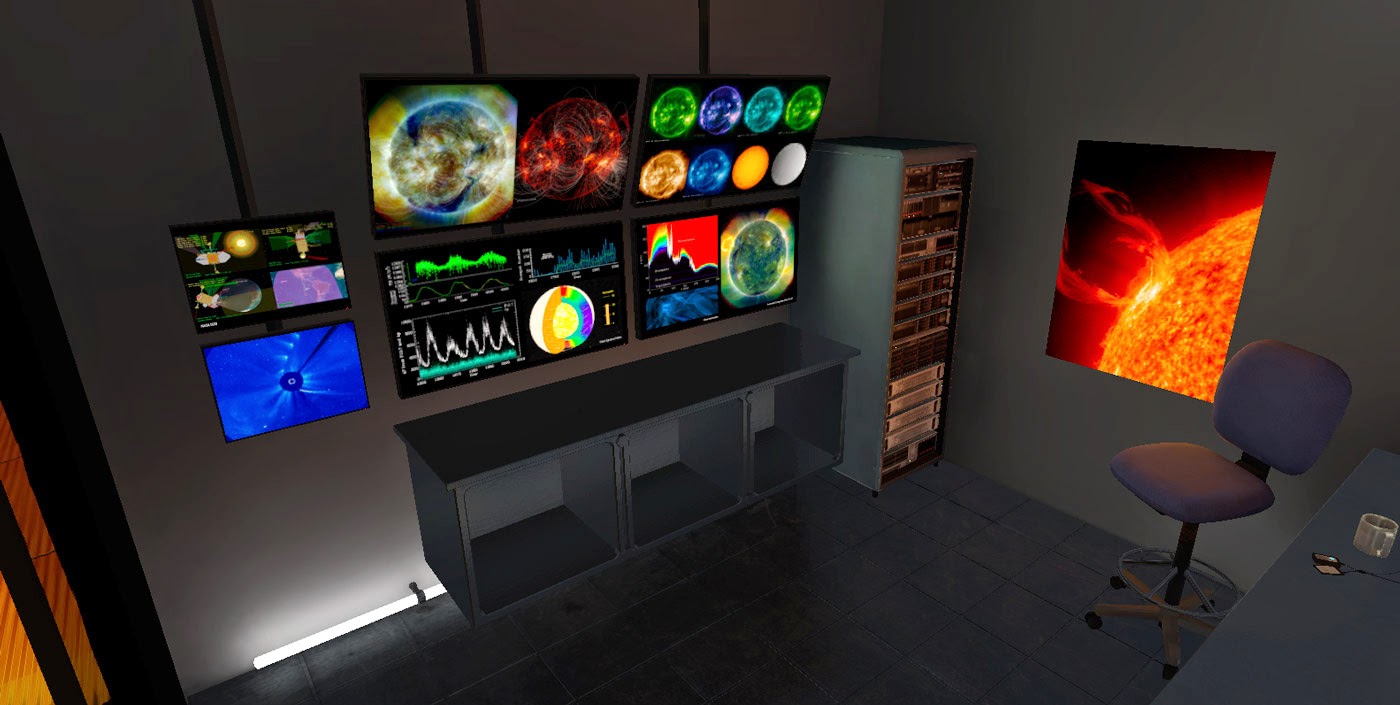

|

| Monitor bank, and [how exciting] a server rack. Blinking lights. |

And at this point my goal is to return to detailing and texturing the magnetic flares around the sun and then release the demo to the community. The aim of this demo is to recreate a location from the film as well as offer a relaxing Oculus demo where you can get a tan in VR. I'll probably be spending a chunk of the Wellington winter months in there attempting to offset seasonal affective disorder.

Till next time!

-j